As AI reshapes our tech landscape in 2024, the importance of DevOps becomes increasingly evident. Here's why DevOps remains crucial:

- Rapid Integration and Deployment: DevOps ensures agile, efficient integration and deployment of evolving AI technologies.
  - Facilitates quick adaptation to AI advancements.
  - Enables effective deployment of AI solutions.

- Enhanced Team Collaboration: The collaborative essence of DevOps is vital for AI projects.
  - Fosters communication between development, operations, and AI teams.
  - Aligns AI initiatives with business goals.

- Quality Assurance and Reliability: Essential in AI applications.
  - Continuous testing through CI/CD methodologies.
  - Helps deliver robust, high-quality AI solutions.

- Security in AI Implementations: DevOps integrates security throughout the development lifecycle.
  - Essential for AI systems handling sensitive data.
  - Helps maintain compliance and protect against breaches.

- Cost Efficiency and Resource Optimization: Particularly important for resource-intensive AI projects.
  - Streamlines development processes, reducing waste.
  - Maximizes resource utilization for better ROI.

In summary, DevOps in 2024 is not just relevant but indispensable for the effective management and success of AI-driven projects. It ensures agility, quality, security, and efficiency, making it a cornerstone in the realm of AI-driven technological advancements.

DevOps Tools: 🔧

This week, we talk about Trivy as our featured 'Tool of the Week.' Trivy is an open-source vulnerability scanner specifically designed for container images, file systems, and even Git repositories. It's a tool that has swiftly gained popularity for its simplicity and effectiveness in the DevOps toolkit.

What sets Trivy apart is its ease of use. Unlike other scanners that require pre-configuration and extensive setup, Trivy is ready to go right after installation. It's as simple as running a single command to scan your container images, making it accessible even for those who are just venturing into the realm of container security.

Trivy's comprehensive database is another feather in its cap. It scans for vulnerabilities in OS packages and various application dependencies, ensuring a thorough check. This database is regularly updated, keeping pace with the ever-evolving landscape of security threats.

Integration with CI/CD pipelines is seamless, allowing teams to automate the scanning process and catch vulnerabilities early in the development cycle. This integration is crucial for maintaining a robust security posture without sacrificing the speed and efficiency that DevOps is known for.

Following are a couple of links to learn more about Trivy

DevOps How-Tos: 📘

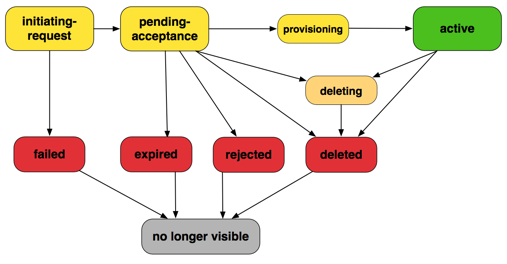

In this week's DevOps How-Tos, let's see how to set up cross-account VPC peering using Terraform.

Cross-account VPC peering is a networking connection between two Virtual Private Clouds (VPCs) that reside in different AWS accounts. This connection allows for the routing of traffic between the VPCs using private IP addresses, effectively enabling direct network communication across AWS accounts as if the resources were within the same network.

Doing this with an Infrastructure as Code tool such as Terraform adds a layer of complexity, because a single configuration has to act in both AWS accounts. It can be achieved with the following steps.

- Configure AWS Providers: Define two providers in Terraform, one for each AWS account (Account A and Account B).

- Define or Identify VPCs: Either define new VPCs or use data sources to reference existing VPCs in both accounts.

- Request VPC Peering: In Account A, create a VPC peering connection request to the VPC in Account B.

- Accept VPC Peering: In Account B, accept the VPC peering connection request from Account A.

- Update Route Tables: Add routes to the route tables in both VPCs to allow traffic to flow between the peered VPCs.

- Execute Terraform Configuration: Run `terraform init` and `terraform apply` to apply the configuration, or run the IaC pipeline.

This process establishes a network connection between VPCs in different AWS accounts, enabling communication across account boundaries.
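The steps above can be sketched in Terraform roughly as follows. This is a minimal sketch under stated assumptions, not the configuration from the linked code: it assumes both VPCs already exist in the same region, that CLI profiles named `account-a` and `account-b` exist, and that one route table per VPC needs a route. The profile names, variable names, and resource labels are all hypothetical.

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
  }
}

# Hypothetical inputs: IDs of existing VPCs and route tables in each account.
variable "region" {
  type    = string
  default = "us-east-1"
}

variable "requester_vpc_id" {
  type = string
}

variable "accepter_vpc_id" {
  type = string
}

variable "requester_route_table_id" {
  type = string
}

variable "accepter_route_table_id" {
  type = string
}

# Step 1: one provider per account (profile names are assumptions).
provider "aws" {
  alias   = "requester"
  region  = var.region
  profile = "account-a"
}

provider "aws" {
  alias   = "accepter"
  region  = var.region
  profile = "account-b"
}

# Step 2: reference the existing VPCs and look up Account B's account ID.
data "aws_vpc" "requester" {
  provider = aws.requester
  id       = var.requester_vpc_id
}

data "aws_vpc" "accepter" {
  provider = aws.accepter
  id       = var.accepter_vpc_id
}

data "aws_caller_identity" "accepter" {
  provider = aws.accepter
}

# Step 3: Account A requests the peering connection.
resource "aws_vpc_peering_connection" "this" {
  provider      = aws.requester
  vpc_id        = data.aws_vpc.requester.id
  peer_vpc_id   = data.aws_vpc.accepter.id
  peer_owner_id = data.aws_caller_identity.accepter.account_id
  auto_accept   = false # the requester cannot auto-accept across accounts
}

# Step 4: Account B accepts the request.
resource "aws_vpc_peering_connection_accepter" "this" {
  provider                  = aws.accepter
  vpc_peering_connection_id = aws_vpc_peering_connection.this.id
  auto_accept               = true
}

# Step 5: route each VPC's traffic for the other VPC's CIDR over the peering.
# Referencing the accepter makes the routes wait until the peering is accepted.
resource "aws_route" "requester_to_accepter" {
  provider                  = aws.requester
  route_table_id            = var.requester_route_table_id
  destination_cidr_block    = data.aws_vpc.accepter.cidr_block
  vpc_peering_connection_id = aws_vpc_peering_connection_accepter.this.id
}

resource "aws_route" "accepter_to_requester" {
  provider                  = aws.accepter
  route_table_id            = var.accepter_route_table_id
  destination_cidr_block    = data.aws_vpc.requester.cidr_block
  vpc_peering_connection_id = aws_vpc_peering_connection_accepter.this.id
}
```

The two VPC CIDR ranges must not overlap, and security groups in each VPC still need rules that allow the peer's traffic; neither is handled in this sketch.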

For the code and the documentation please refer to the following links

DevOps Concepts: 🧠

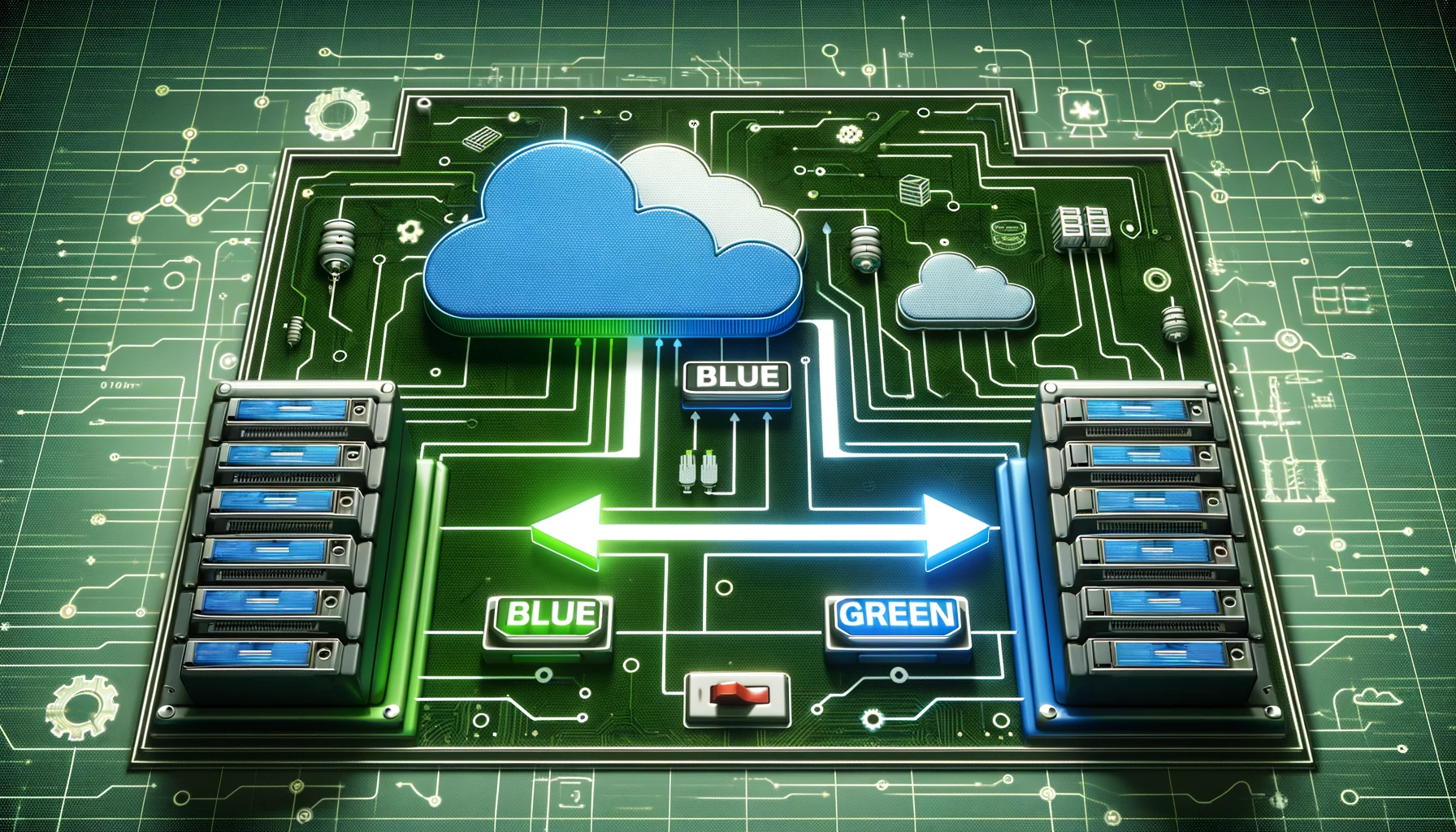

This week, let's find out more about the DevOps concept of Blue-Green deployment.

- Definition: Blue-Green deployment is a strategy in DevOps for updating applications with minimal downtime and risk. It involves two identical environments, one Blue (active) and one Green (idle).

- Environments: The Blue environment runs the current application version, while the Green environment gets the new version. Both environments are identical in terms of hardware and software configuration.

- Testing: The Green environment, with the new version, is thoroughly tested and evaluated. This ensures that any bugs or issues are resolved before it goes live.

- Switching Traffic: Once the Green environment is stable and ready, traffic is gradually or instantly rerouted from Blue to Green. This switch can be achieved using techniques like DNS changes or load balancer reconfiguration.

- Rollback Plan: If issues arise post-deployment, traffic can be quickly rerouted back to the Blue environment, ensuring service continuity and minimal impact on users.

- Advantages: This method greatly reduces downtime and risk as the new version is fully tested in a production-like environment before the switch. It also provides a quick rollback mechanism.

- Use Case: Ideal for mission-critical applications where uptime and stability are paramount. It's commonly used in cloud-native and high-availability environments.

- Challenges: Requires double the resources as two complete environments are needed. Coordination and precise execution are crucial for successful implementation.
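One way to implement the traffic switch through load balancer reconfiguration is a weighted forward action on an AWS Application Load Balancer listener. The sketch below is illustrative only: it assumes the AWS provider is already configured and that the load balancer and the Blue and Green target groups already exist. The variable names and the `green_weight` knob are hypothetical.

```hcl
# Hypothetical inputs: ARNs of an existing ALB and its two target groups.
variable "load_balancer_arn" {
  type = string
}

variable "blue_target_group_arn" {
  type = string
}

variable "green_target_group_arn" {
  type = string
}

# Share of traffic sent to Green: 0 = all Blue, 100 = all Green.
variable "green_weight" {
  type    = number
  default = 0

  validation {
    condition     = var.green_weight >= 0 && var.green_weight <= 100
    error_message = "green_weight must be between 0 and 100."
  }
}

resource "aws_lb_listener" "app" {
  load_balancer_arn = var.load_balancer_arn
  port              = 80
  protocol          = "HTTP"

  default_action {
    type = "forward"

    forward {
      # Blue keeps whatever share of traffic Green does not receive.
      target_group {
        arn    = var.blue_target_group_arn
        weight = 100 - var.green_weight
      }

      target_group {
        arn    = var.green_target_group_arn
        weight = var.green_weight
      }
    }
  }
}
```

Under these assumptions, `terraform apply -var="green_weight=100"` performs an instant cutover, intermediate values shift traffic gradually, and applying with `green_weight=0` is the rollback to Blue.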

Refer to these links for more details

DevOps Resources: 📚

💡💡💡 Have you ever faced these questions related to your DevOps processes?

📜 Is there a comprehensive list of DevOps best practices?

📊 How do I measure the proficiency of DevOps processes?

⚠️ What are the common pitfalls and antipatterns of DevOps?

⏳ Which DevOps process should I implement first?

📈 How do I measure the maturity of my DevOps processes?

🚀 Last week, AWS announced the AWS Well-Architected Framework DevOps Guidance, which can help answer a lot of these questions. 🚀

It provides a comprehensive set of DevOps best practices, metrics to measure the effectiveness of your DevOps processes, and common antipatterns and pitfalls to avoid.

This DevOps guidance introduces a few concepts to organise and categorise these best practices.

🌟 Sagas:- The guidance groups its best practices into themes, which it calls sagas.

The guidance talks about the following sagas.

🧩 Organisational Adoption

🧩 Development Lifecycle

🧩 Quality Assurance

🧩 Automated Governance

🧩 Observability

🌟 Capabilities:- Each saga is further broken down into capabilities, which are more granular groups of best practices within that saga.

🌟 Indicators:- Each of the capabilities has indicators or best practices you could implement. These indicators are categorised as FOUNDATIONAL, RECOMMENDED or OPTIONAL, which helps you prioritise implementing these best practices.

🌟 Metrics:- The guidance provides metrics that you can use to measure the proficiency of your DevOps processes.

🌟 Antipatterns:- The guidance also lists common pitfalls to avoid while implementing your DevOps processes.

🎯 Who can benefit from this Well-Architected Framework for DevOps Guidance?

This Well-Architected Framework DevOps Guidance is a goldmine for anyone involved in designing, implementing, measuring, or maintaining DevOps practices. Whether you're a seasoned pro or just getting started, this resource has something to offer you. 🙌👩‍💻👨‍💻

DevOps Events: 🗓️

This week we talk about AWS re:Invent, which took place from November 27th to December 1st, 2023, in Las Vegas, Nevada.

Overall, it was a massive event with over 2,000 sessions, keynotes, innovation talks, builder labs, workshops, tech and sustainability demos, and even featured attendees trying their hand as NFL quarterbacks!

Here are some of the key highlights:

- Amazon Q: Introducing a new type of generative AI assistant specifically designed for work. This assistant can help with tasks like writing emails, creating presentations, and summarizing documents.

- Next-generation AWS-designed chips: Announcing new custom processors that will power Amazon EC2 instances, offering significant performance and cost improvements.

- Focus on Sustainability: Showcasing new features and initiatives to help customers reduce their environmental impact when using AWS cloud services.

- Machine Learning advancements: Several announcements were made around machine learning, including Amazon SageMaker enhancements, new AI-powered data tools, and a focus on responsible AI development.

- Security and privacy: New security features and tools were announced, along with a continued emphasis on data privacy and protection.

- Developer tools: Several new developer tools and services were announced, making it easier for developers to build and deploy applications on AWS.

Out of the thousands of videos from the event, this week we take a look at one that shows how to create end-to-end CI/CD pipelines using infrastructure as code on AWS.

Community Spotlight: 💡

This section is meant for community Q&A and community voices. If you are a reader of this newsletter, tell us about yourself. If you have something to say or share, please let us know and we might feature you in this section.

Last but Not Least: 🎬

Is it Dev? Is it Ops? It is DevOps!